Note: You will see an advertising banner beneath our videos that play off the Brighteon platform (when they are not maximised). This advertising helps support the Brighteon platform that doesn't charge subscribers for their content, is committed to free speech, yet is also respectful of copyright-related law. We'd like to clarify that no advertising revenue from Brighteon is received by the Alliance for Natural Health Intl.

By Robert Verkerk PhD, founder, executive and scientific director

On 19 March 2020, the UK downgraded the status of COVID-19, no longer classifying it as a ‘high consequence infectious disease’ (HCID). This was even before the reported mortality rate for ‘deaths involving Covid’ started escalating, and at a time when the government, academics, the National Health Service (NHS) and the public were worried that the NHS’s capacity to handle critically ill patients would be overrun. That was back then.

Now we’re seeing rising cases – referred to widely as a ‘surge’ in infections – and government machines are working hard to prepare the public for more restrictions.

If you’re in the UK, publicly protesting against such measures has become tougher since the beginning of the week, when Prime Minister Boris Johnson introduced his ‘rule of 6’ against the will of all but two ministers.

Our question of the week

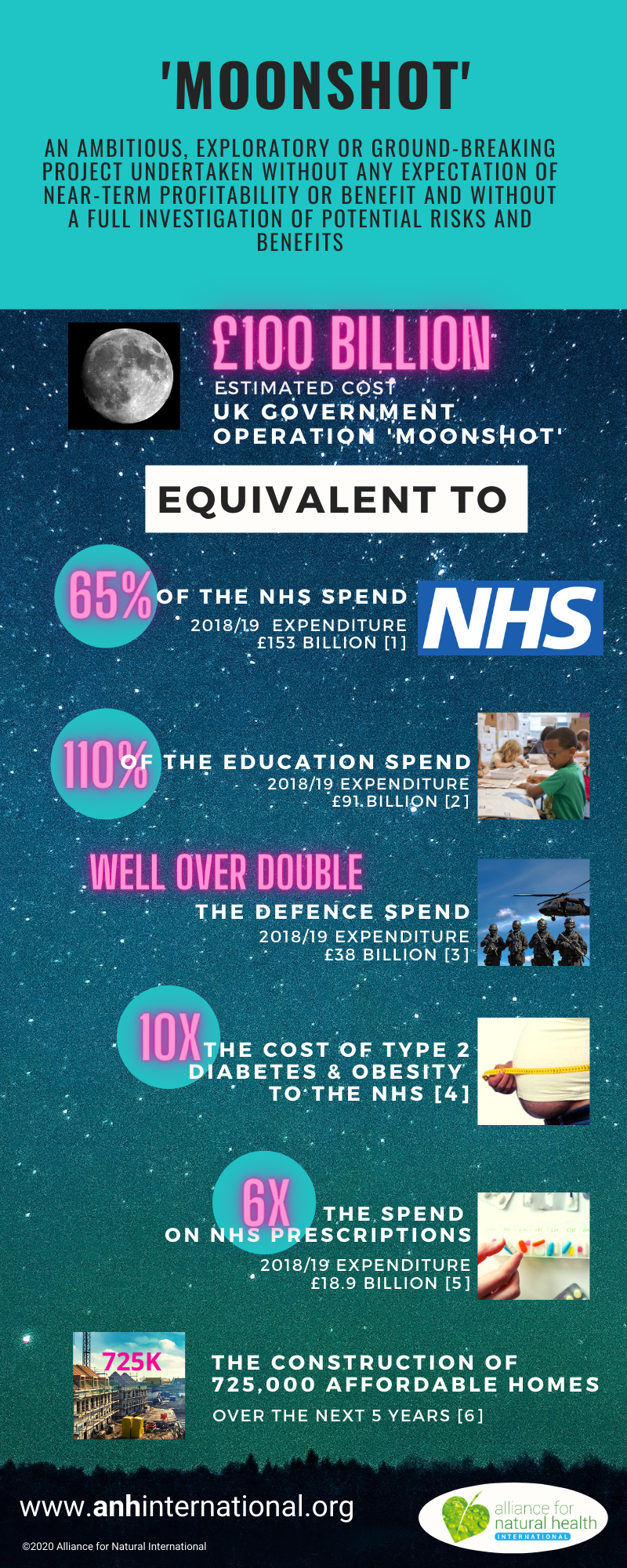

The question we want to pose to the British people this week is this: given the current status of COVID-19 disease, as well as the precarious state of the UK economy, is Boris Johnson’s proposed investment of more than £100 billion in the ‘operation moonshot’ coronavirus testing programme appropriate? Is it the best way of getting back to some semblance of normal life and resurrecting the economy?

This kind of investment needs to be considered in the context of a country that, according to the Organisation for Economic Cooperation and Development (OECD), is expected to suffer an 11.5% slump in national income (gross domestic product; GDP) this year, worse than any other developed country. That slump compares with the typical 6% fall in GDP during 1918–21 owing to the Spanish flu, which wiped out a staggering 2.1% of the global population.

Spanish flu 1918 (Source: Wikimedia Commons)

This isn’t a rhetorical question. It’s a question that we hope triggers critical thinking among us, the citizens and residents of a country whose short public consultation on plans to change UK medicines law, to prepare the way for mass vaccination of the population, closes by the end of this week. Testing and vaccination might seem like natural bedfellows: testing ostensibly tells you whether or not you’re infected in the absence of any available treatment, while vaccination (once available) provides insurance against infection in the future. But could they also be devices designed to achieve something quite different, something that’s not really in our interest but more in the interests of those controlling the shots (pun intended)?

Testing troubles

The trouble is that the RT-PCR tests on which ‘operation moonshot’ is based aren’t accurate. The problem is complicated by the lack of a ‘gold standard’ and by known, significant variations in sensitivity and specificity. As if that weren’t bad enough, there are many other sources of variation, including cross-reaction with other genetic material, the timing of tests, potential contamination and sample degradation.

If you feel so inclined, you can use the BMJ’s ‘Covid-19 test calculator’ to work out the percentage of people likely to have false and true positives and negatives for different pre-test probabilities of infection and different test sensitivities and specificities. Generic test calculators such as this medical test calculator can also be used. Assuming an 80% pre-test probability (i.e. the best estimate of the actual prevalence of the disease in a given area or population), 70% sensitivity and 95% specificity, the calculators show you that, of those who receive a negative test result, 56% are actually likely to be infected (with a test of 100% sensitivity and specificity, none would be). And only 57% of those tested would get a positive result, even though 80% are infected.
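If you’d rather check the arithmetic yourself than rely on an online calculator, here’s a minimal Python sketch. It is our own illustration, not the BMJ tool’s code. It splits a hypothetical group of 1,000 people tested into true and false positives and negatives, assuming the 80% prevalence, 70% sensitivity and 95% specificity used above. The function names are made up for this example.

```python
def expected_outcomes(prevalence, sensitivity, specificity, n=1000):
    """Expected numbers of true/false positives and negatives among n people tested."""
    infected = n * prevalence
    uninfected = n - infected
    true_pos = infected * sensitivity       # infected and correctly flagged
    false_neg = infected - true_pos         # infected but missed by the test
    true_neg = uninfected * specificity     # not infected and correctly cleared
    false_pos = uninfected - true_neg       # not infected but flagged anyway
    return true_pos, false_pos, false_neg, true_neg


def summarise(prevalence, sensitivity, specificity):
    """Shares (0-1) that matter when interpreting a mass-testing programme."""
    tp, fp, fn, tn = expected_outcomes(prevalence, sensitivity, specificity)
    total = tp + fp + fn + tn
    return {
        "testing positive": (tp + fp) / total,
        "testing negative": (fn + tn) / total,
        "infected among negatives": fn / (fn + tn),  # 1 - negative predictive value
        "infected people missed": fn / (tp + fn),    # 1 - sensitivity
    }


# First scenario: 80% prevalence, 70% sensitivity, 95% specificity.
for label, share in summarise(0.80, 0.70, 0.95).items():
    print(f"{label}: {share:.1%}")
```

Run as written, this should print 57.0% testing positive, 43.0% testing negative, 55.8% of negatives actually infected and 30.0% of infected people missed.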

The trouble is that precision declines as the prevalence of the disease falls. So, in the above example, if you replace the 80% pre-test probability with 1% (still around 10 times higher than current prevalence estimates based on ONS data), you find that the probability of being infected if you have a positive test result is only about 12%. In other words, precision (or 'positive predictive value') declines dramatically as prevalence goes down.
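The decline in precision is easy to see by computing the positive predictive value (PPV) directly with Bayes’ theorem. In this sketch we keep the 70% sensitivity and 95% specificity assumed above and vary prevalence, including one value below the 1% floor of the online calculators. The prevalence values are illustrative only, not estimates for any real population.

```python
def positive_predictive_value(prevalence, sensitivity, specificity):
    """Probability that a person with a positive result is infected (Bayes' theorem)."""
    true_pos = sensitivity * prevalence
    false_pos = (1 - specificity) * (1 - prevalence)
    return true_pos / (true_pos + false_pos)


# Keep the 70% sensitivity / 95% specificity test fixed and let prevalence fall,
# including to 0.1%, below the 1% floor of the online calculators.
for prevalence in (0.80, 0.10, 0.01, 0.001):
    ppv = positive_predictive_value(prevalence, 0.70, 0.95)
    print(f"prevalence {prevalence:.1%}: PPV {ppv:.1%}")
```

With these inputs the PPV works out at roughly 98%, 61%, 12% and 1.4% respectively.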

So even at 95% sensitivity, 99% specificity and a 1% prevalence (pre-test or clinical probability), only 49% of those who receive a positive test would actually be likely to be infected. Play with the calculators yourself. Their downside is that they don't go below 1%, and actual prevalence in most parts of the world is now much lower than 1%. Keen mathematicians can use the formulae for positive and negative predictive values given by Altman & Bland (1994).
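For keen mathematicians, the standard expressions for the predictive values follow from Bayes’ theorem. Write $p$ for prevalence (pre-test probability), $\mathrm{Se}$ for sensitivity and $\mathrm{Sp}$ for specificity. The second line is a worked check, assuming 95% sensitivity, 99% specificity and 1% prevalence.

$$
\mathrm{PPV} = \frac{\mathrm{Se}\,p}{\mathrm{Se}\,p + (1-\mathrm{Sp})(1-p)}, \qquad
\mathrm{NPV} = \frac{\mathrm{Sp}\,(1-p)}{\mathrm{Sp}\,(1-p) + (1-\mathrm{Se})\,p}
$$

$$
\mathrm{PPV} = \frac{0.95 \times 0.01}{0.95 \times 0.01 + 0.01 \times 0.99} = \frac{0.0095}{0.0194} \approx 0.49
$$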

All of this assumes the tests are taken in the right way and at the optimal time. In the real world, precision can be much lower than under perfect lab conditions.

Also, even when manufacturer-claimed sensitivity and specificity are much higher than in the above example, precision can be very low when prevalence is low.
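One way to make this point concrete is to ask: at what prevalence does a positive result become as likely to be false as true (PPV = 50%)? Setting sensitivity × p = (1 − specificity) × (1 − p) and solving for p gives p = (1 − specificity) / (sensitivity + 1 − specificity). The sketch below evaluates this for three test profiles. They are hypothetical numbers chosen for illustration, not any manufacturer’s actual claims.

```python
def break_even_prevalence(sensitivity, specificity):
    """Prevalence at which PPV is exactly 50%; below it, most positives are false.

    Solves sensitivity * p == (1 - specificity) * (1 - p) for p.
    """
    false_pos_rate = 1 - specificity
    return false_pos_rate / (sensitivity + false_pos_rate)


# Hypothetical test profiles, from modest to very high accuracy.
for sens, spec in ((0.70, 0.95), (0.95, 0.99), (0.99, 0.999)):
    p_star = break_even_prevalence(sens, spec)
    print(f"Se {sens:.1%}, Sp {spec:.1%}: PPV below 50% when prevalence < {p_star:.2%}")
```

For these profiles, the break-even prevalence comes out at roughly 6.7%, 1.0% and 0.1%. Better specificity pushes the threshold down. But short of 100% specificity, there is always some prevalence below which most positives are false.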

Returning to the first example, if you could be 100% sure that someone had Covid-19 as a result of confirmed infection with SARS-CoV-2 (which is almost never the case, because lab-confirmed cases are based on inaccurate PCR tests, making this more of a theoretical notion), then for every 100 people who are infected, 30 would be missed. That's a 30% failure rate (the share of infected people missed) where infection is guaranteed, or a 56% failure rate (the share of negative results that are wrong) if you're 80% sure someone is infected, which is the more realistic scenario.

Would you make one of the biggest investments of your life in some unproven technology that had more than a 30 to 50% failure rate? Especially without asking those who’d contributed to your wealth (taxpayers) if they thought this was a good idea?

From case rate to positivity rate

Last week we discussed the emergence of the ‘casedemic’ – the change in the narrative around Covid-19 that now rarely discusses daily death rates, and instead focuses the public eye on cases. This week, to aid your critical thinking, we add another metric that helps you look at test results. It’s the positivity rate. In the context of Covid-19, it’s quite simply the percentage of those tested who test positive, based on RT-PCR tests.

Like all metrics, it has its limitations, because it depends on who’s getting tested. With a scientific hat on, the results we see for Covid-19 are limited by the fact that the sample of people getting tested isn’t randomised. But it’s still a very useful relative metric that tells you a lot about the progression of an epidemic, much more so than the simple number of cases, which the media has been trying to keep our eyes focused on.

We also talked last week about another useful metric linked to mortality, the infection fatality ratio (IFR). But given that so few people now appear to be dying of Covid-related causes, it’s important to get a handle on the proportion of positive tests found among those tested using RT-PCR testing, inaccurate though it is. Enter the positivity rate. The metric has been given a lot more airtime in other countries, such as the USA and Australia. It’s not widely used in the UK, the country that hosts one of the leading vaccine contenders, the Oxford/AstraZeneca vaccine.

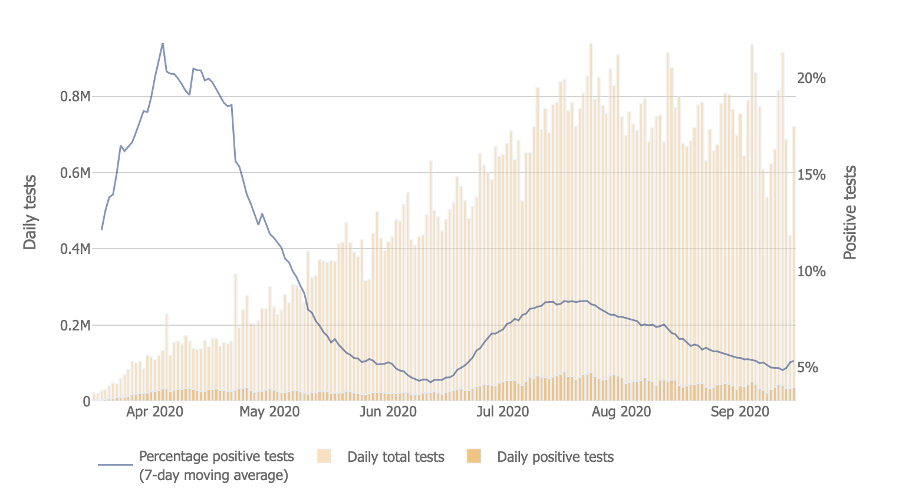

On 12 May 2020, the World Health Organization (WHO) somewhat arbitrarily advised governments that, before reopening economies and removing restrictions, the positivity rate should be below 5% for at least 14 days. Currently in the USA, around half the states (25) are below this level and half (26) are above it. This puts the USA, nationally, a fraction over the 5% positivity rate (5.1%) in week 36 (the first week of September).
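Here’s a minimal sketch of one way to check a daily series against the WHO’s advice. We’ve assumed that ‘below 5% for at least 14 days’ means each of the 14 most recent daily values is strictly below 5%. The function name and that reading are our own. The WHO’s guidance may be applied differently in practice, for example to a smoothed rate.

```python
def below_threshold_for(daily_positivity, threshold=5.0, days=14):
    """True if each of the most recent `days` values is below `threshold` (percent).

    Assumes one value per day, oldest first, with no gaps.
    """
    if len(daily_positivity) < days:
        return False  # not enough history to judge
    return all(rate < threshold for rate in daily_positivity[-days:])


# Hypothetical daily positivity rates (%), oldest first.
recent = [5.4, 5.2] + [4.9] * 14
print(below_threshold_for(recent))       # True: the last 14 days are all below 5%
print(below_threshold_for(recent[:-1]))  # False: the 14-day window still includes 5.2%
```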

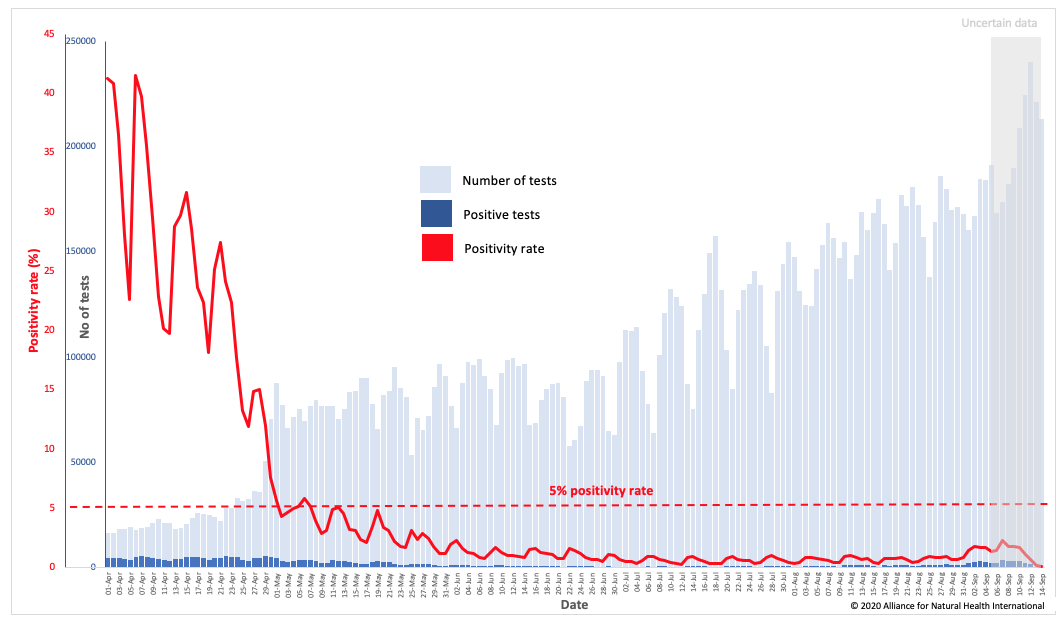

In the UK, we’ve calculated the 7-day moving average for the positivity rate, based on data from the UK government dashboard, at just 0.7%, more than 7 times lower than the WHO’s arbitrary threshold of 5% (see Figure 1 below). We’ve included the US data (Figure 2) below for reference; among the reasons for the high figures in the US is the fact that the epidemic wave struck the southern states significantly later.

Fig 1 Positivity rate trend for the UK (Data source: GOV.UK; data analysis and graphics by Alliance for Natural Health International)

Fig 2 Positivity rate trend for the USA (Source: Johns Hopkins Coronavirus Resource Center)
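To show how a positivity rate and its 7-day moving average fit together, here’s a minimal sketch using made-up daily figures, not the GOV.UK data behind Figure 1. It assumes one entry per day, oldest first, with at least one test carried out every day.

```python
def daily_positivity(positives, tests):
    """Daily positivity rate in percent: positive results / tests carried out x 100.

    Assumes every day has at least one test (otherwise division by zero).
    """
    return [100.0 * pos / n for pos, n in zip(positives, tests)]


def trailing_mean(values, window=7):
    """Trailing moving average; output starts on day `window` (earlier days lack a full window)."""
    return [sum(values[i - window + 1 : i + 1]) / window for i in range(window - 1, len(values))]


# Hypothetical daily figures (not real dashboard data), oldest first.
positives = [700, 650, 800, 900, 1000, 1100, 1200, 1300]
tests = [100_000, 95_000, 110_000, 120_000, 130_000, 135_000, 140_000, 150_000]

rates = daily_positivity(positives, tests)
smoothed = trailing_mean(rates)
print([f"{r:.2f}%" for r in smoothed])
```

Averaging daily rates gives each day equal weight, regardless of how many tests were run. A common alternative is to divide 7-day totals of positives by 7-day totals of tests. This sketch isn’t necessarily the exact method used for Figure 1.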

Back to the big question

With a bit of this additional food for thought, let’s get back to the question: should Brits be investing such a vast sum (yes, an eye-watering £100 billion, a figure on a par with or in excess of the UK’s education spend, to give just one example) without any recourse to the views of citizens or their elected representatives?

If it helps, you might also want to consider some other questions, such as: is Boris Johnson’s new ‘Rule of 6’, which was apparently ushered in against the will of every minister other than Matt Hancock and Michael Gove, all part of a crony capitalism revival? One that’s being steered through with Boris Johnson’s hand firmly on the tiller of the United Kingdom?

Think about it.

We've got two closely related questions we'd love you to answer via a Twitter poll:

Question 1

Do you support the £100bn ‘operation moonshot’? More info at: https://t.co/OspaHNf17S

— ANH International (@anhcampaign) September 17, 2020

Question 2

Should Parliament be consulted before the UK government invests £100bn in 'operation moonshot'? More info at: https://t.co/OspaHNwBZq

— ANH International (@anhcampaign) September 17, 2020

Infographic references:

[2] Institute for Fiscal Studies

[4] NHS England

[5] NHS Digital

Comments

your voice counts

17 September 2020 at 9:27 am

Rob - excellent piece thanks. We have been writing about the major problems with the RT-PCR test as a measure of 'cases' - what is actually happening is a 'positive test' spike which is not the same thing at all - and yet being used to drive policy. This could theoretically be used to keep various forms of lockdown in place indefinitely. (our blog https://covidwatching.org)

One question I have is regarding this: "Assuming an 80% pre-test probability, 70% sensitivity and 95% specificity, the calculators show you that for the majority who have receive a negative test result, 57% are actually likely to be infected." Does this not then suggest that the number of people who are infected is actually 57% higher than reported, which while it argues that the RT-PCR test is not reliable and hence the spending is beyond ludicrous, it seems to argue against your original point. What about the number of 'positive' cases that are not infected. What am I missing?

17 September 2020 at 1:13 pm

Carol - thanks for the kind words. Re your question, with the scenario I depicted, no, it doesn't mean there's 57% more infected. What I was depicting is a realistic scenario in which you would expect 80% to have a true positive (because of 80% pre-test probability) but you only find 57% have a true positive. Because what I had written was clearly not clear enough, I've explained it further. Here is the extract in the modified version that I hope makes it clearer:

"Assuming an 80% pre-test probability (i.e. the best estimate of the actual prevalence of the disease in a given area or population), 70% sensitivity and 95% specificity, the calculators show you that for those who receive a negative test result (i.e. the majority), 57% are actually likely to be infected (as compared with 80% if the tests had both 100% sensitivity and specificity). Put another way, if you could be 100% sure that someone had Covid-19 as a result of confirmed infection with SARS-CoV-2 (which is almost never the case because lab-confirmed cases are based on inaccurate PCR tests, meaning this is more of a theoretical notion), for every 100 people who are infected, 30 would be missed. This means there's a 30% failure rate where you can guaranteed infection or a 57% failure rate if you're 80% sure someone is infected, which is a more realistic scenario. The trouble is that the precision declines as the prevalence of the disease reduces. So, in the above example, if you substitute the 80% pre-test probability for 1% (still around 10 times more than the current data based on ONS data), you find that the probability of being infected if you have a positive test result is only 16%. IN other words, precision (or 'positive predictive value') declines dramatically as prevalence goes down.

So at 95% sensitivity and 95% sensitivity and a 1% prevalence (pre-test/clinical probability) level, only 49% of those who receive a positive test would actually be likely to be infected. Play with your calculators yourself. Their downside is that they don't go below 1% and actual prevalence for most parts of the world are now much lower than 1%. Keen mathematicians can use he actual formulae to determine positive and negative predictive values from Altman & Bland (1994)."

Let us know if this makes sense now!! Keep up the great work with covidwatching.org. Free expression is soooo important when there is so much confusion out there and so many trying to manipulate or censor views! Best, Rob

17 September 2020 at 9:37 am

The more you test, the more 'cases' not illnesses you will discover.

This brings me to an important question - How are the 'case' numbers rising when the tests are not available to many people?

We have heard that test centres are turning people away because they have no staff and no test kits available. How then are the 'cases' rising?

To spend a 100 million pounds on tests that have been proved to be at best unreliable and at worst fraudulent seems to be another knee jerk reaction from a government that has run this pandemic from the 'back of a fag packet'!

The problem with testing is not new, as far back as April only frontline workers were being tested, now not even frontline workers can get their tests done.

Testing has one major flaw: a test can be negative on one day, test again a few days later and a positive result may occur, either because that person has come into contact with an infected person or the test itself has been conducted in a different manner. So are we to have a test a week, or another test a few days after the first one, in which case we will be tested regularly because according to this government the numbers are rising so rapidly.

I wonder how many government officials have shares in the testing kit and PPI manufacturing companies?

This 'plandemic' is part of the global reset and the sooner people realise that the looming vaccination programme is not about health, it is about money and so global governments have to get the numbers of 'cases' up in order to justify the mandatory roll out of an untested and so far unlicensed vaccine.

Boris obviously thinks that to spend 100 million to achieve that is money well spent!

Thank you so much Robert and the ANH for keeping us so informed that we feel we really do have a voice!

17 September 2020 at 1:17 pm

Thanks Pauline - we do our best using our 'good science' and 'good law' approach! Note: If it were £100 million Boris wanted to spend on operation moonshot, it might be slightly easier to justify - but it's one thousand times this amount - £100 billion!!! PS we've now added a Twitter poll to the base of the article!

17 September 2020 at 1:29 pm

Sorry Robert! I had realised that just after I hit the post button!

17 September 2020 at 11:16 am

Testing being rolled out.

I drive to town today passing the Army's testing station.

I get a test. (Possibly producing false positive/negative for any coronavirus.)

I go to Tesco and contract COVID-19 from a passerby.

How long will the test keep me "safe"? Can COVID-19 read and say "oh cool, he's had a test - that's ok then, we'll leave him alone"?

Does the test I had while abroad in March still protect me?

Complete waste of time and money.

17 September 2020 at 1:33 pm

Hi Quent - you raise the very important question of how long after infection a PCR test will show positivity. That depends on the incubation period, and we know that varies between 1 and 11 days, probably averaging around 5 days. So even if you went to a test centre the day after getting infected in the Tesco aisles, it would be quite unlikely you'd get a positive test result. And no - your March test will be even more meaningless now than it was! We're waiting for a day when authorities start to rejoice at getting evidence of rising infections without serious disease and death - that's the best signal for a waning epidemic! Trouble is - as you know - they won't want to admit it - there's too much at stake maintaining the hype about it. Stay well, Rob

17 September 2020 at 12:25 pm

This is lunacy, power gone mad!

.

Sociopaths' and their belief that they are always right! and covering themselves. with many blood tests that are known by scientists, medical profession and others to be fallible.

Westminster Government?

As always Rob, you give facts and figures to support your well written brief assessment.

Sweden, Taiwan and other countries have proved that the UK, USA & other countries' management of this FLU has not worked as well as their scientific and common-sense approaches and working with their Politicians.

Yes, I am sure that the overall health of Swedish people would be more positive than the UK population, there are many variables both in an individual and their environments. But as we know in the History of mankind that "bugs" are in the atmosphere and the vast majority of individual human beings Immune systems cope so very well, and have when one considers the food, chemicals, numerous injections for babies, stressors, modern medicine/drugs, and so much else.

Sweden gave attention to their more susceptible members of society, whose Immune systems were already compromised. Britain ??

I see Boris as being a carbon copy of so many Politicians in the World for the last 30+ years, the thinking that you just throw money at whatever the problem is, instead of quietly looking at the root cause(s) in every area. And there appears to be plenty of data available, and highly qualified Scientists to look at, but again, we have cover ups and misdirecting valid information - called corruption, egos and power/control and lack of the characteristics so needed at this time especially, to give Leadership.

17 September 2020 at 1:52 pm

Thanks for commenting Deidre. We hope you're well. As always you get to the nub of the matter!

Warm wishes

Melissa

17 September 2020 at 12:28 pm

Thank you for this piece. Great, important facts and figures (as usual) and shared widely (from me anyway). Why this isn't more readily available to the public is mind-blowing but unsurprising - the plan all along it seems is leading us to widespread vaccination programmes. When power is used and abused for the profit of the few and actually harming the mass, that's when action needs to be taken.

17 September 2020 at 1:53 pm

Hi Nathalie. We're glad you enjoyed our article. Thank you for sharing our work; it's much appreciated and a great way to circumvent the censorship that's currently going on.

Warm regards

Melissa

17 September 2020 at 6:58 pm

If you are aware that a reset of the global social, economic - (and replacement of any political process )- with systemic controls) is operating under pretext of pandemic, then money as we thought we knew it, is also switching to a digital 'credit system' in which helicopter money enables provisional access to goods and services, for compliance.

'Get rich, be a snitch' is an overstatement, but may seem true relative to social exclusion and shame.

So the 'raiding' or redistributing such wealth as can be leveraged is operating in the same manner as making the most of a crisis in terms of increasing control and withholding or removing rights. Or riding the bandwagon effect, for where the fear is flagged to, the funding follows.

But there is another take on this, and that is to discern an insane script winding up to its own logical conclusion.

The shift from a locked-down and protected sense of self-separateness running fear and control, but masked in virtue, to a transparency to the movement of being that gives rise to a relational expression, interaction and appreciation, is as I see it, marked by looking upon the 'foundation' beneath the mind of fear and control, to immediately see it is NOT there.

That capacity for fear to operate as belief and become locked in by action and reaction, is for belief to run in place of an inner knowing - which is directly and presently felt connection.

The practical of this is a capacity and willingness to live from a contextual or relational field of awareness rather than operate as a disconnected pathogenic fear, with no power of our own but what we can mask in so as to feed off the life that we think to manipulate to private agenda that once symbolised something real that we longed for from a sense of lack, deprivation or denial.

The full post continues at

https://willingness-to-listen.blogspot.com/2020/09/boris-on-moonshine.html

17 September 2020 at 7:23 pm

Basically, the whole thing amounts to a farce because all the ridiculous measures taken worldwide (with the exception of Sweden) are based on a fallacy, which is the germ theory assumption that we are dealing with a disease and that viruses are pathogens. Nothing could be further from the truth, but the people in charge are scientific dinosaurs unfamiliar with what is called New Biology. Viruses and exosomes are benign. They are not our enemies, but messengers tasked with our genomic updating. The latter has been triggered by man-made air pollution in the form of fumes, toxins, chemicals, insecticides, pesticides, herbicides, glyphosate, plastic materials, radiation etc. and the purpose of the genomic updating or adjustment is to provide us with the required protection, i.e. it is exactly the opposite of what is being assumed by the authorities. Consequently, all new "infections" are triggered by air pollution and should be welcomed as the pandemic will disappear as soon as everybody has been adjusted. The virus or exosome (which is not alive) per se is harmless, but when it binds with certain types of air pollution the latter - and not the virus - can become dangerous. This is particularly so in the case of the carbon particulate PM 2.5, which contains cyanide. In such a case coronavirus sufferers have to be treated for the symptoms caused by the air pollution (not by the virus). In cases of hypoxia caused by PM 2.5 because of its cyanide content the treatment must be for cyanide poisoning, which is easy, cheap and virtually always successful. PM 2.5 seems to be no longer present in European countries, which explains why the fatality rate in Europe has now been zero for some time. Please watch the interviews with Dr. Zach Bush, the most brilliant medical scientist on the planet, who explained all this at the very beginning of the pandemic, but has been consistently ignored by the authorities and the media for unknown reasons.
It simply beggars belief that origin, nature and handling of this crisis were practically known from the outset, but have been deliberately ignored by the media and the people who govern us.

11 October 2020 at 11:05 am

"... in the absence of any available treatment,"

.

surprised to see this homogeneous statement in anh❗

.

medicalHerbalists are treating thousands with antiVirals, both as preventives and as holistic treatments for patients exhibiting symptoms

.

please don't muddle failures of conventional'Medicine', with the sterling work of trueHealth professionals

🤔... 🤔


We welcome your comments and are very interested in your point of view, but we ask that you keep them relevant to the article, that they be civil and without commercial links. All comments are moderated prior to being published. We reserve the right to edit or not publish comments that we consider abusive or offensive.
